QuaMa: Extending Skill Learning with Delta Dynamics Modeling for Precise and Generalizable Quantitative Manipulation

Quantitative manipulation requires robots not only to perform actions but also to achieve precise target quantities, a capability essential for applications such as cooking, laboratory automation, and industrial dispensing. Achieving both precision and generalization, however, remains challenging due to nonlinear and delayed dynamics, and existing end-to-end policies lack the robustness to handle such variability. We introduce QuaMa, a framework that extends skill learning with delta dynamics modeling: a weighted behavior cloning policy captures general skills, while a delta-based Quantity Prediction Model (QPM) forecasts future quantity changes under candidate actions. During execution, Model Predictive Action Refinement (MPAR) uses the QPM to refine the skill policy's output, ensuring accurate and reliable control. This modular design explicitly reasons about quantities while remaining compatible with advances in imitation learning. We validate QuaMa on real-world pouring tasks with diverse containers, liquids, and granules. Results show that QuaMa consistently achieves high accuracy and robust zero-shot generalization to unseen containers, materials, and target quantities. Across all tested scenarios, the average pouring error remains within 1 g, demonstrating both precision and generalization.
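The execution loop described above can be sketched as sampling-based refinement around the BC action. This is a minimal sketch under stated assumptions, not the authors' implementation: the scalar action bounded to [0, 1], the `toy_qpm` stand-in (a fixed gain with a one-step delay instead of a learned network), the random-shooting search, and the parameters `horizon`, `n_samples`, `noise`, and `lam` are all hypothetical. What it does mirror from the text is the delta formulation: the model predicts per-step quantity changes, and future quantities are recovered by adding their cumulative sum to the current reading.

```python
import numpy as np


def toy_qpm(history, actions, gain=0.5):
    """Hypothetical stand-in for a learned delta-based QPM.

    Returns one predicted quantity change per future step. Flow responds to
    the action with a one-step delay, so the first step repeats the most
    recently observed change.
    """
    history = np.asarray(history, dtype=float)
    actions = np.asarray(actions, dtype=float)
    last_delta = history[-1] - history[-2] if len(history) > 1 else 0.0
    deltas = np.concatenate([[last_delta], gain * actions[:-1]])
    return np.maximum(deltas, 0.0)  # poured quantity never decreases


def mpar_refine(a_bc, history, target, qpm, horizon=5, n_samples=64,
                noise=0.2, lam=0.1, rng=None):
    """Return the first action of the lowest-cost candidate sequence near the BC action."""
    rng = np.random.default_rng(rng)
    base = np.full(horizon, float(a_bc))
    # Candidates: the unmodified BC proposal plus Gaussian perturbations of it.
    perturbed = base + noise * rng.standard_normal((n_samples, horizon))
    candidates = np.clip(np.vstack([base, perturbed]), 0.0, 1.0)  # assumed action bounds
    q_now = float(history[-1])
    best_seq, best_cost = None, np.inf
    for seq in candidates:
        # Delta prediction -> absolute future quantities via cumulative sum.
        q_future = q_now + np.cumsum(qpm(history, seq))
        # Terminal quantity error plus a penalty for straying from the BC proposal.
        cost = (q_future[-1] - target) ** 2 + lam * np.sum((seq - a_bc) ** 2)
        if cost < best_cost:
            best_seq, best_cost = seq, cost
    return float(best_seq[0]), float(best_cost)


# Usage: 19.0 poured so far, goal 20.0, BC proposes a fairly aggressive 0.8.
history = [18.0, 18.5, 19.0]
action, cost = mpar_refine(0.8, history, target=20.0, qpm=toy_qpm, rng=0)
print(f"refined action {action:.3f}, predicted cost {cost:.3f}")
```

Because the unmodified BC sequence is always among the candidates, the refinement in this sketch can only match or lower the predicted cost relative to executing the BC action as-is; the `lam` term keeps the refined action close to the learned skill.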

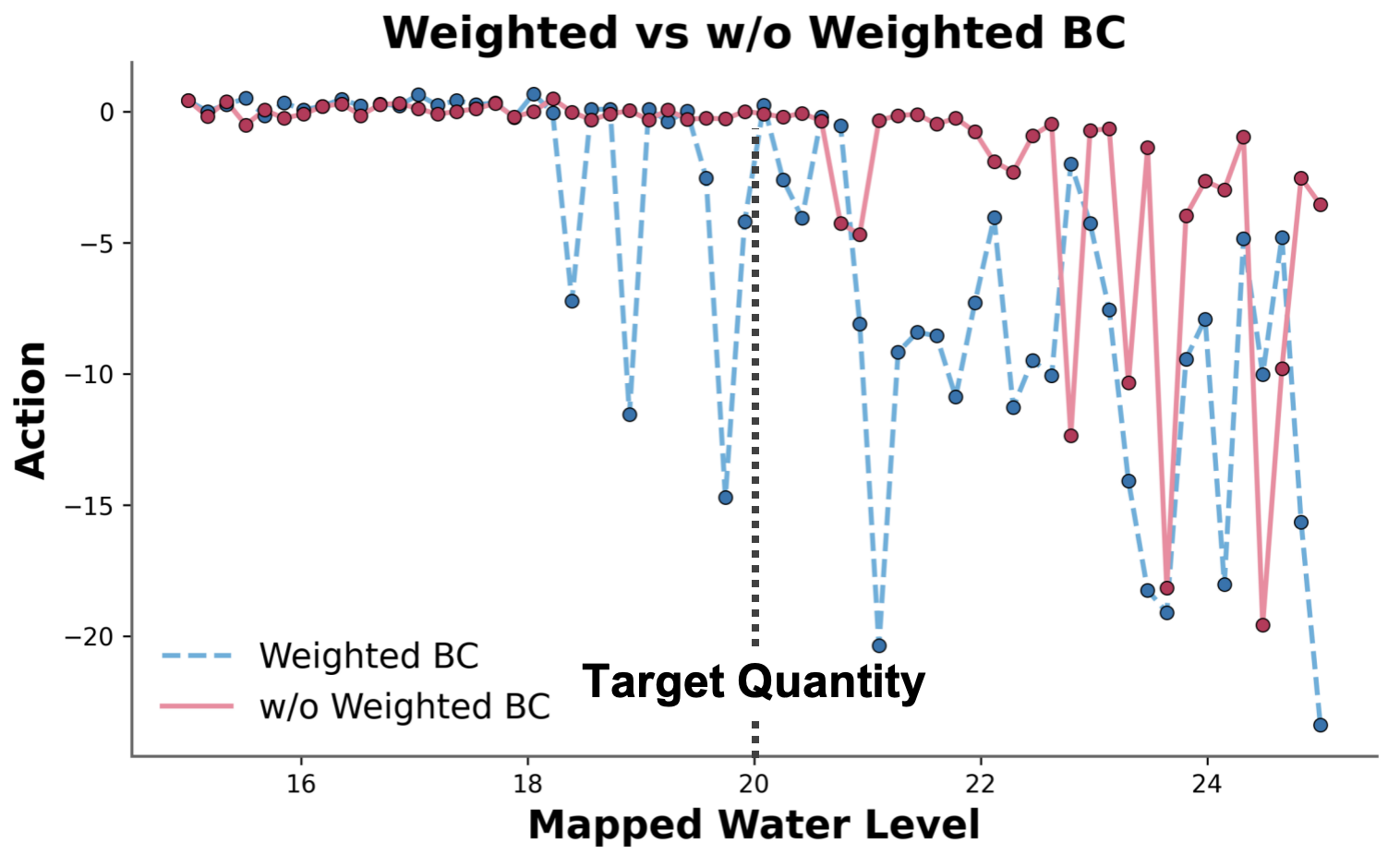

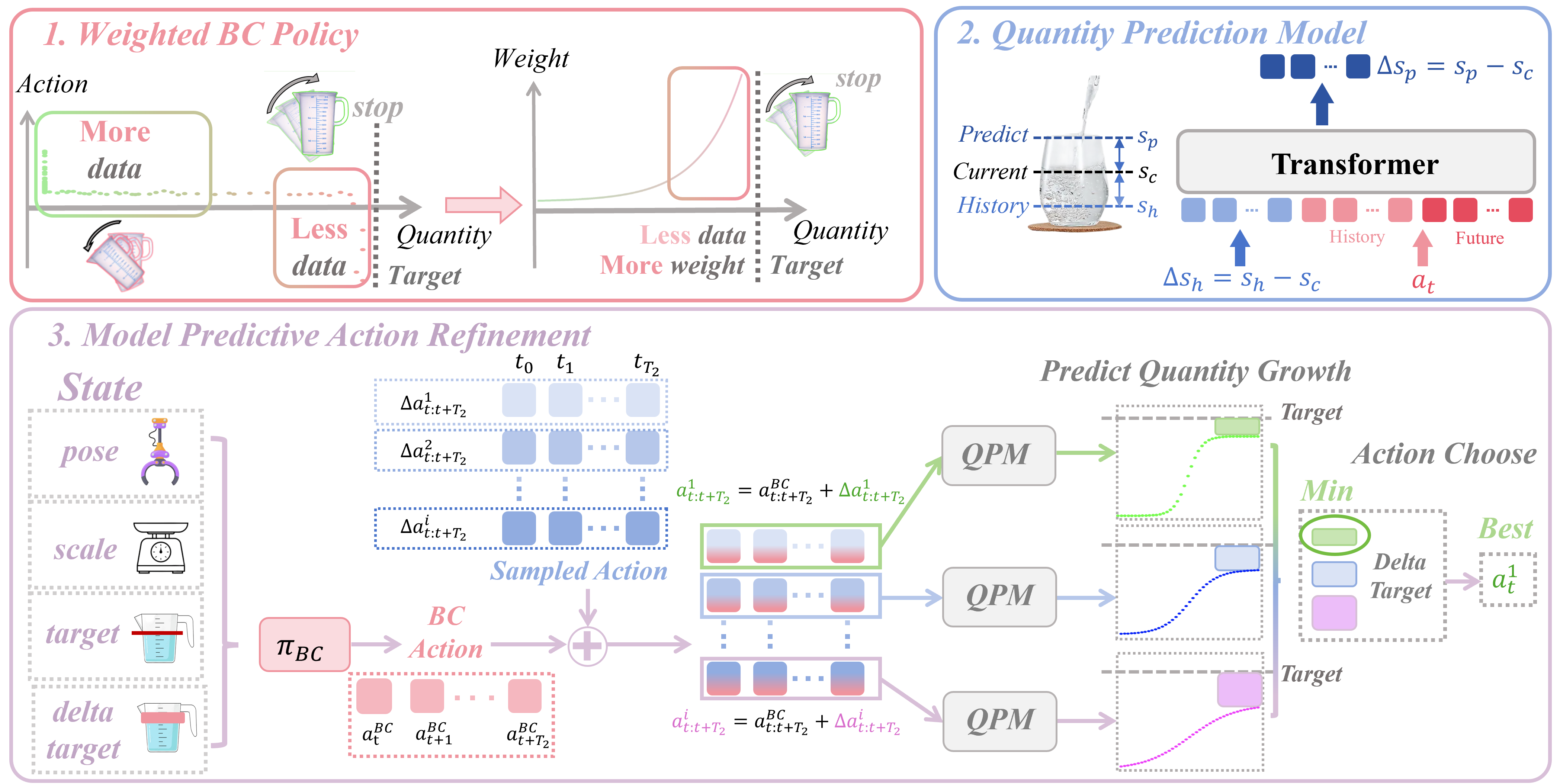

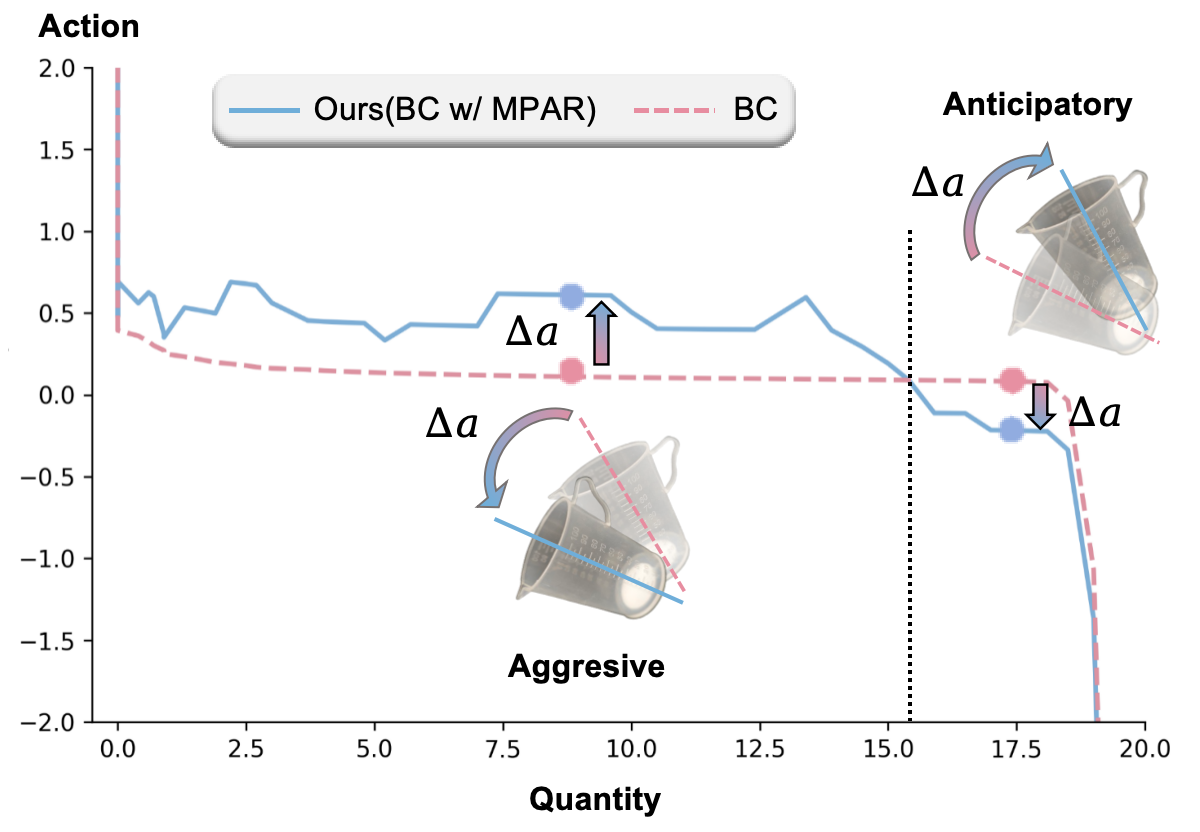

Overview of QuaMa. (1) A weighted BC policy addresses data imbalance by emphasizing actions near the target quantity. (2) A delta-based Quantity Prediction Model (QPM) predicts future quantity changes from history and candidate actions. (3) During execution, Model Predictive Action Refinement (MPAR) combines BC outputs with QPM predictions to refine actions online, enabling precise and generalizable quantitative manipulation.
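One way to realize "emphasizing actions near the target quantity" is to weight each demonstration sample by how close its current quantity is to the goal. The Gaussian bump below, its parameters `alpha` and `sigma`, and the squared-error loss are assumptions chosen for illustration; the source does not specify QuaMa's exact weighting scheme.

```python
import numpy as np


def near_target_weights(current_q, target_q, alpha=4.0, sigma=2.0):
    """Hypothetical weighting: upweight samples whose remaining quantity is small."""
    remaining = np.asarray(target_q, dtype=float) - np.asarray(current_q, dtype=float)
    # Every sample keeps weight >= 1; samples near the goal get up to 1 + alpha.
    return 1.0 + alpha * np.exp(-remaining ** 2 / (2.0 * sigma ** 2))


def weighted_bc_loss(pred_actions, demo_actions, weights):
    """Weighted mean squared error between predicted and demonstrated actions."""
    pred = np.asarray(pred_actions, dtype=float)
    demo = np.asarray(demo_actions, dtype=float)
    w = np.asarray(weights, dtype=float)
    per_sample = np.sum((pred - demo) ** 2, axis=-1)  # squared error per sample
    return np.sum(w * per_sample) / np.sum(w)


# Usage: three samples from one demonstration with goal 20; the last is near the goal.
w = near_target_weights([5.0, 15.0, 19.5], 20.0)
demo = np.array([[0.9], [0.6], [0.1]])
loss = weighted_bc_loss(demo + 0.1, demo, w)
```

Since most frames of a pouring demonstration are recorded far from the goal, an unweighted loss is dominated by the coarse early phase; the weighting shifts the policy's capacity toward the fine final adjustments.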

Experiment Setup. We evaluate on two quantitative manipulation tasks: pouring liquids and granules.

Results. Final poured quantity per clip, with the signed deviation from the goal in parentheses.

PD controller: 21.0 (+1.0) | BC: 20.5 (+0.5) | QuaMa: 20.1 (+0.1)

PD controller: 26.5 (+6.5) | BC: 21.5 (+1.5) | QuaMa: 20.3 (+0.3)

Clips: cup + water, cup + rice

BC: 20.5 (+0.5) | QuaMa: 20.1 (+0.1)

In distribution (goal 20): 20.3 (+0.3) | Interpolation (goal 15): 16.2 (+1.2) | Extrapolation (goal 40): 38.3 (-1.7)

In distribution (goal 20): 20.1 (+0.1) | Interpolation (goal 15): 15.1 (+0.1) | Extrapolation (goal 40): 40.4 (+0.4)

Vanilla BC: 21.8 (+1.8) | Weighted BC: 20.2 (+0.2)
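The parenthesized numbers are signed deviations (final quantity minus goal), so the goal of the two PD controller / BC / QuaMa comparison clips can be recovered as 20 (for example 21.0 - 1.0). The snippet below re-checks that reading and averages the absolute error per method over just those two clips. It is a back-of-the-envelope check on the displayed clips, not the paper's aggregate metric, and the unit is presumably grams.

```python
from statistics import mean

# (final quantity, reported deviation) for the two goal-20 comparison clips.
CLIPS = {
    "PD controller": [(21.0, 1.0), (26.5, 6.5)],
    "BC": [(20.5, 0.5), (21.5, 1.5)],
    "QuaMa": [(20.1, 0.1), (20.3, 0.3)],
}


def mean_abs_error(pairs, goal=20.0):
    """Mean |final - goal|, after checking each reported deviation against the goal."""
    for final, dev in pairs:
        if abs((final - goal) - dev) > 1e-9:
            raise ValueError(f"deviation {dev} does not match goal {goal}")
    return mean(abs(final - goal) for final, _ in pairs)


for method, pairs in CLIPS.items():
    print(f"{method}: {mean_abs_error(pairs):.2f}")
# PD controller: 3.75
# BC: 1.00
# QuaMa: 0.20
```

On these two clips the error ordering (PD controller, then BC, then QuaMa) is consistent with the abstract's claim, but two clips are far too few to stand in for the reported average error.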